Pentaho Data Integration Tutorial for Beginners - Part 1: Group By vs Memory Group By

Hi my fellow Devs! Sincere apologies for my absence from this blog for a long time. I will be regularly posting useful Business Intelligence tutorials in simple language. I am starting with a mini tutorial series in parts for beginners using Pentaho Data Integration, an ETL software.

For IT professionals who have worked in other ETL tools as well as absolute beginners starting their IT career in data analytics, PDI is a very simple to learn ETL program.

Here in this post, I have given a demo for few basic scenarios one may encounter while doing ETL development and how can we handle those scenarios using Pentaho Data Integration.

For IT professionals who have worked in other ETL tools as well as absolute beginners starting their IT career in data analytics, PDI is a very simple to learn ETL program.

Here in this post, I have given a demo for few basic scenarios one may encounter while doing ETL development and how can we handle those scenarios using Pentaho Data Integration.

Scenario#1: Aggregation of numerical data using Group By & Memory Group By

Aggregation is the process of combining data to get a summary details like Sum, Min, Max, Average, Median, etc. In this example, I am going to demonstrate Pentaho Group By with example use case with simple text file as source and the data set used for this example is given below:

Fig 1: Source data set

|

Requirement:

The task is to find the Department wise maximum salary amount from the given data. This can be achieved by using PDI Group By and Memory Group By steps. While both of them does the same work, using 'Group By' requires sorted data whereas 'Memory Group By' does not require sorted data to operate. If the rows to be grouped is so huge, it could not be handled within available memory capacity, 'Group By' step can be used along with 'Sort Rows' step instead of 'Memory Group By'.

Note:

If the data is from a database table, ideally we should be doing the aggregation within the database environment and use the aggregated data in PDI transformation as SQL Group By operation is generally more efficient than doing it in any ETL environment. Hence, PDI Group By/Memory Group By steps are more suitable for any data in which aggregation at the source is not possible eg. csv, txt, etc.

The image given above, is the design of the PDI transformation file that I created for demonstrating the aggregation operation using both Grouping steps (just to show that both of them work as expected and in actual development using any one of them is sufficient). Alright, lets build this Transformation, step by step.

Note:

If the data is from a database table, ideally we should be doing the aggregation within the database environment and use the aggregated data in PDI transformation as SQL Group By operation is generally more efficient than doing it in any ETL environment. Hence, PDI Group By/Memory Group By steps are more suitable for any data in which aggregation at the source is not possible eg. csv, txt, etc.

|

Fig 2: PDI Transformation Design

|

- Bringing the components/steps into Design canvas.

- Connecting steps using hops.

- Configuring the steps.

- Saving and running the Transformation file.

1. Bringing the Steps into Design Canvas:

The steps we need for this demo transformation are:

- Text File Input

- Sort Rows

- Group By

- Memory Group By

- Text File Output (one for Group By results & another for Memory Group By Results)

At first, we need to connect the Source data, which in our case is a simple .txt file. For this, we need to use Text File Input step. Simply, type the step name in the left side design explorer pane. Once the step appears in the list, drag the step and drop it in the design canvas area as shown in the below image.

On clicking the option, we will get a line with arrow at the mouse cursor. Simply click the Sort Rows step and two will be connected together. Similarly, click the Sort Rows and connect it with Group By step.

Note:

Note:

Any two steps can also be connected by selecting the first step and by holding the Shift key in the keyboard, move the cursor towards the second step, a hop will be linking two steps.

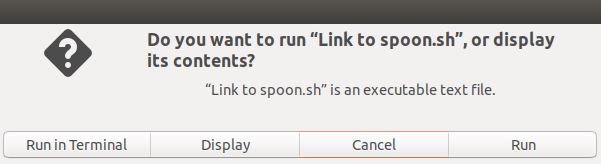

Now, connect all the steps as shown in Fig. 2. When connecting Text File Input with Memory Group By step, we will get a message as shown below:

Fig. 6: Copying the data in two streams

|

Simply select Copy option. What this does is, it passes two copies of the text file dataset: one to Sort Rows step and another to Memory Group By step.

3. Configuring the steps:

a. Text File Input:

Once, we brought this step, we need to configure it, which means we need to choose the source file folder path and name, metadata information such as field names and data types. Lets see this one by one.

Double click the Text file input step on the design canvas and in the window that appears, the first tab will be File tab. Here we need to specify the complete file path with file name. Click the Browse option and navigate to the required folder and choose the intended file. Once you have chosen the file, click Add button. The file name will be listed in the Selected Files section. In the same manner, if we have multiple files with same metadata i.e., name, datatype and order of of field/columns the same in all the files.

Having selected the file name, click the Add button and that will add the file name in the list in the bottom as shown in the screenshot below:

Double click the Text file input step on the design canvas and in the window that appears, the first tab will be File tab. Here we need to specify the complete file path with file name. Click the Browse option and navigate to the required folder and choose the intended file. Once you have chosen the file, click Add button. The file name will be listed in the Selected Files section. In the same manner, if we have multiple files with same metadata i.e., name, datatype and order of of field/columns the same in all the files.

Fig. 7: File Selection

|

Next, navigate to Content Tab. There we need to change the Separator value to Comma (,) instead of Semicolon (;) which is set by default. Leave all other settings as it is.

|

| Fig. 9: Setting the Separator |

|

| Fig. 10: Column metadata details |

Since our source dataset in this example is very simple, we don't have to configure much in this Text File input step. Hence, we can finally click the Ok button on the bottom and we are done with this step.

b. Sort Rows Step:

As I mentioned earlier, using Group By step requires the data to be sorted to give expected results. Since, our source data is from text file where sorting the data is not possible. Hence, we need to use Group By in association with Sort Rows step to get the sorted data.

Our goal in this example, is to find the Department wise maximum salary. Hence, the columns involved are Department and Salary. We need to sort Department column first since it is going to be used in Grouping operation and this column can be either in ascending or descending fashion.

Followed by Salary column which needs to be only in the Descending order as we are trying to find the maximum salary. The sort rows step will condition the dataset from Table File Input step in a way that Group By step can consume this conditioned (sorted) data.

Double click the Sort rows step and click the Get Fields button to get all the incoming columns. Keep only required columns and ensure to keep the grouping column first followed by aggregated column. See the screenshot below for more details.

d. Memory Group By Step:

This is an alternate option to Group By step but does not require the incoming data to be sorted. Configuring this step is same of that of the regular Group By step. |

| Fig. 13: Memory Group By step |

e. Text File Output step:

After defining the output file name and path, proceed to content tab. Select the separator for the final output file.

|

| Fig. 15: Text File Output step - 2 |

Configure the other Text File Output Step in the same manner but ensure to give a different output file name for that step.

Save the Transformation file (.ktr) in a folder of your choice and run the transformation by selecting Action >> Run. Alternatively, pressing F9 also does the same.

Upon executing the transformation, we can see green tick marks on each of the step indicating the steps are successfully executed without error. We can preview the results of any step post execution to understand how the records are manipulated on each step. I have given below the previews of Text File output data from Group By and Text File output data from Memory Group By in the below screenshots.

Save the Transformation file (.ktr) in a folder of your choice and run the transformation by selecting Action >> Run. Alternatively, pressing F9 also does the same.

Upon executing the transformation, we can see green tick marks on each of the step indicating the steps are successfully executed without error. We can preview the results of any step post execution to understand how the records are manipulated on each step. I have given below the previews of Text File output data from Group By and Text File output data from Memory Group By in the below screenshots.

|

| Fig. 16: Preview of Text File Output data from Group By step |

Now lets check the actual output files.

|

| Fig. 18: Final output file from Group By step |

Fig. 19: Final output file from Memory Group By step

|

In We can see that in output file for Group By step, the departments are arranged in descending (Z-A) order as a result of setting N in the Sort Rows step. Whereas in the output file from Memory Group By step, the departments are arranged in ascending order (A-Z) by default. But the numerical results are same in both the cases. This concludes our discussion on Group By vs Memory Group By steps in Pentaho Data Integration.

Summary:

In this post, we have seen how to implement aggregation operation using both Pentaho Data Integration Group By and Memory Group Steps in detail and the limitations. We can also use these steps to find Min, Max, Average values.

I have given this post more elaborately since this is the first post in the series. Please let me know in the comments section if this post helped and let me know if you want me to keep the tutorials short.