Install Pentaho Data Integration (CE) on Windows - A Detailed Step-by-Step Tutorial

Hi fellow Devs! Hope you had a wonderful day.

Today's tutorial is all about how to download and install Pentaho Data Integration (Community Edition) on a Windows laptop or desktop.

For those who are new to the ETL process and PDI/Kettle, check out my blog post:

When I was new to Pentaho, I had difficulty installing it, particularly the configuration part. I searched the internet and learned those things by trial and error, so I thought it would be worth sharing my experience.

The basic requirements are:

- Pentaho Data Integration Community Edition

- JRE 1.8 and above (Java Runtime Environment)

- JDK 1.8 and above (Java Development Kit)

- Windows 7 and above (Though PDI can be installed in Linux or Mac OS as well, the scope of this post is limited to Windows Operating System)

Step-1: Downloading the Pentaho Data Integration (PDI/Kettle) Software

The first step is to download PDI Community Edition from the official SourceForge download page. The latest version at the time of writing this post is 8.2, and the download is about 1.1 GB. It is downloaded as a zip file named 'pdi-ce-8.2.0.0-342.zip'.

Step-2: Extracting the zip file

Extract the downloaded zip file, which will be in your Downloads folder. Right-click the file and choose 'Extract Here' if you want to extract it into the Downloads folder.

If you want to choose a different folder, right-click the file, select the 'Extract files...' option and enter the destination folder path. (These two options are added by archive tools such as WinRAR or 7-Zip; Windows' built-in 'Extract All...' option also lets you choose the destination.) The extracted folder is named 'data-integration' by default.

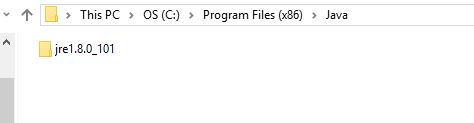
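If you prefer to script the extraction, or just want to double-check the result, here is a minimal Python sketch using the standard `zipfile` module. It assumes the archive's top-level folder is named 'data-integration' as described above; the function name `extract_pdi` and any example paths are hypothetical.

```python
import zipfile
from pathlib import Path


def extract_pdi(zip_path, dest_dir):
    """Extract the PDI zip into dest_dir and return the 'data-integration' folder.

    Assumes the archive's top-level folder is 'data-integration';
    raises FileNotFoundError if that folder is not present after extraction.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
    pdi_home = dest / "data-integration"
    if not pdi_home.is_dir():
        raise FileNotFoundError(f"'data-integration' not found in {dest}")
    return pdi_home
```

For example, `extract_pdi(r"C:\Users\me\Downloads\pdi-ce-8.2.0.0-342.zip", r"C:\pentaho")` (hypothetical paths) should return the path of `C:\pentaho\data-integration`, which is the folder containing spoon.bat.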

Step-3: Checking JRE version

The next step is to check the Java Runtime Environment (JRE) version on your system. First, check whether Java is installed on your machine. If it is not, download it from the official Java download page.

If it is already installed, check the JRE version. To do this, go to C:\Program Files\Java\. On 64-bit Windows, also check C:\Program Files (x86)\Java\, which is where a 32-bit Java installation is placed.

Fig 1. Screenshot showing the path containing JRE

Inside this folder there will be a folder whose name starts with 'jre' followed by the version number (for example, 'jre1.8.0_191'). This version should be 1.8 or higher. If it is, we are good to proceed with the next step.
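The folder-name check above can be sketched in Python. This is a minimal sketch, assuming JRE folders are named like 'jre1.8.0_191' (Java 8 style) or 'jre-' followed by the version (assumed for later releases); the helper names `jre_feature_version` and `suitable_jres` are hypothetical. Because Java 8 and earlier use '1.x' numbering, the sketch treats the second number as the version in that case.

```python
import re
from pathlib import Path

# JRE folder names, e.g. 'jre1.8.0_191' (Java 8 style) or 'jre-10.0.2' (assumed Java 9+ style).
JRE_DIR = re.compile(r"^jre-?(\d+(?:\.\d+)*)(?:_\d+)?$", re.IGNORECASE)


def jre_feature_version(folder_name):
    """Return the Java version number (8 for 'jre1.8.0_191'), or None if the name doesn't match."""
    match = JRE_DIR.match(folder_name)
    if match is None:
        return None
    numbers = [int(n) for n in match.group(1).split(".")]
    # Java 8 and earlier are numbered '1.x', so the second number is the real version.
    if numbers[0] == 1 and len(numbers) > 1:
        return numbers[1]
    return numbers[0]


def suitable_jres(java_dir, minimum=8):
    """Return the JRE folders under java_dir whose version is at least `minimum`."""
    found = []
    for child in Path(java_dir).iterdir():
        version = jre_feature_version(child.name)
        if child.is_dir() and version is not None and version >= minimum:
            found.append(child)
    return sorted(found)
```

On Windows you would call it as `suitable_jres(r"C:\Program Files\Java")`; an empty list means no JRE 1.8 or higher was found in that folder.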

If your PC or laptop does not have a JRE, download JRE 1.8 or higher from the official Oracle JRE download page and install it. The latest version at the time of writing this post is '1.9'. Download the correct file for your OS type (32-bit/64-bit), and choose the file with the '.exe' extension, as it does not require any extraction tool.

Once the file is downloaded, run it to install the JRE.

Step-4: Checking JDK version

The next step is to check the JDK version on your Windows PC. This is similar to the previous step.

Go to the same Java folder as in the previous step (C:\Program Files\Java\ or C:\Program Files (x86)\Java\).

In this folder, there should be a folder with a name similar to 'jdk1.8.0_191'. If you can find it, this step is done. If not, download the JDK from the official Oracle JDK download page.

As in the previous step, download the correct file for your OS architecture (32-bit/64-bit). Several file formats will be available; choose the one with the '.exe' extension and install the JDK by running the file after the download.

Fig 2. Screenshot showing the path containing JDK folder
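To find the folder you will later use for JAVA_HOME, a small Python sketch can scan both default Java locations for 'jdk*' folders and pick the newest one. It assumes the default install paths mentioned above; the sort key compares the numbers in each folder name, so 'jdk1.8.0_191' ranks above 'jdk1.8.0_60' (plain string sorting would get this wrong). The names `version_key` and `newest_jdk` are hypothetical.

```python
import re
from pathlib import Path

# Default locations mentioned in Step-3 (assumed; adjust if Java lives elsewhere).
DEFAULT_JAVA_DIRS = (r"C:\Program Files\Java", r"C:\Program Files (x86)\Java")


def version_key(folder_name):
    """Sort key from every run of digits, so 'jdk1.8.0_191' ranks above 'jdk1.8.0_60'."""
    return tuple(int(n) for n in re.findall(r"\d+", folder_name))


def newest_jdk(java_dirs=DEFAULT_JAVA_DIRS):
    """Return the path of the newest 'jdk*' folder in java_dirs, or None if there is none."""
    candidates = []
    for base in java_dirs:
        base_path = Path(base)
        if base_path.is_dir():
            candidates.extend(p for p in base_path.glob("jdk*") if p.is_dir())
    if not candidates:
        return None
    return max(candidates, key=lambda p: version_key(p.name))
```

If `newest_jdk()` returns None, the JDK still needs to be downloaded and installed.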

Step-5: Setting the Environment Variables

The final step is to configure the environment variables so that PDI can find the JRE and JDK folders.

- Open My Computer (File Explorer), or press Windows+E.

- In the left pane, right-click 'Computer' (Windows 7) or 'This PC' (Windows 10) and select 'Properties'. A new window will appear showing the processor, RAM capacity, computer name, etc.

- Alternatively, navigating to Control Panel\System and Security\System brings up the same window.

- In the left pane, click 'Advanced system settings' to open the System Properties window. Go to the 'Advanced' tab and click the 'Environment Variables' button.

- In the Environment Variables window, click the 'New...' button.

- Enter 'PENTAHO_JAVA' (in upper case) as the variable name and the full path to java.exe under the jre folder as the variable value, then click OK. Refer to the screenshot below.

Fig 3. Creating Environment variable - PENTAHO_JAVA

Next, create another variable in the same way, with 'PENTAHO_JAVA_HOME' as the variable name and the jre folder path as the variable value, as in the screenshot below.

Fig 4. Creating Environment variable - PENTAHO_JAVA_HOME

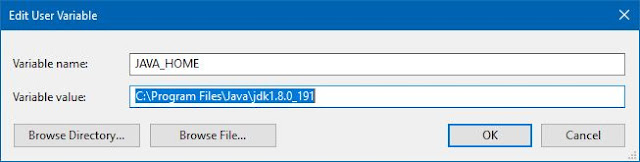

Finally, create another variable named JAVA_HOME, with the path of the jdk folder (see Step-4) as its value.

Fig 5. Creating Environment variable - JAVA_HOME

Note:

The screenshots above were taken on 64-bit Windows, with Java installed under 'C:\Program Files (x86)\Java'. If your Java is installed under 'C:\Program Files\Java' instead (for example, on 32-bit Windows), replace 'C:\Program Files (x86)\Java' with 'C:\Program Files\Java' in each variable value.
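The three variables described above can be derived from just two folders. Here is a minimal Python sketch, assuming java.exe sits in the JRE's bin subfolder (where Java 8 installs it); `pentaho_env` and `setx_commands` are hypothetical helpers, and the folder names used with them are only examples.

```python
import ntpath


def pentaho_env(jre_dir, jdk_dir):
    """Build the PDI-related variables from a JRE folder and a JDK folder (hypothetical helper).

    PENTAHO_JAVA      -> java.exe inside the JRE's bin subfolder
    PENTAHO_JAVA_HOME -> the JRE folder itself
    JAVA_HOME         -> the JDK folder
    """
    # Drop trailing backslashes so no value ends in '\"', which Windows
    # command-line parsing can treat as an escaped quote.
    jre_dir = jre_dir.rstrip("\\")
    jdk_dir = jdk_dir.rstrip("\\")
    return {
        "PENTAHO_JAVA": ntpath.join(jre_dir, "bin", "java.exe"),
        "PENTAHO_JAVA_HOME": jre_dir,
        "JAVA_HOME": jdk_dir,
    }


def setx_commands(env):
    """Render each variable as a Windows 'setx NAME "value"' command line."""
    return [f'setx {name} "{value}"' for name, value in env.items()]
```

Running the generated lines in a Command Prompt with the standard Windows `setx` command stores them as user variables instead of clicking through the dialog. `setx` does not change the Command Prompt window it runs in; only programs started afterwards see the new values, so open a new window (or restart, as below) before launching PDI.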

Restart your machine. Go to the 'data-integration' folder that we extracted in Step-2 and find the file 'spoon.bat'. Double-click it and PDI will open.

You can also right-click spoon.bat and select Send to > Desktop (create shortcut). This lets you open PDI right from the desktop instead of going to the data-integration folder every time.

It may be slow to start the first time, but it should not take more than 3-4 minutes. If you have difficulty with any of the above steps, please let me know in the comments.

Happy Learning.

Troubleshooting

For Windows 7 32-bit, if PDI does not open after you set the environment variables as described above, try this method.

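Go to the data-integration folder and select the spoon.bat file. Right-click it, select Rename, and change the file extension from '.bat' to '.txt'. Now you can edit it in a text editor such as Notepad. (Alternatively, right-click spoon.bat and choose Edit to open it in Notepad directly.) Search for 'Xmx256m' and replace it with 'Xmx1024m', keeping the capital 'X', then save the file. The -Xmx option sets the maximum memory (heap size) Java may use, and Java option names are case-sensitive.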

Then change the file extension back from '.txt' to '.bat'. Now double-click the spoon.bat file; it should open PDI, aka Kettle.
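The same edit can be scripted. This is a minimal Python sketch, assuming the option appears in spoon.bat as '-Xmx256m' (the value mentioned above). It keeps the original capitalization of 'Xmx', writes a spoon.bat.bak backup first, and preserves the file's Windows line endings; no renaming to '.txt' is needed. The helper names `set_max_heap` and `patch_spoon_bat` are hypothetical.

```python
import re
import shutil
from pathlib import Path


def set_max_heap(bat_text, old="256m", new="1024m"):
    """Replace 'Xmx<old>' with 'Xmx<new>' (case-insensitive search, like Notepad).

    Returns (new_text, number_of_replacements). The matched 'Xmx' prefix is
    kept exactly as written, so '-Xmx256m' becomes '-Xmx1024m'.
    """
    pattern = re.compile(r"(xmx)" + re.escape(old), re.IGNORECASE)
    return pattern.subn(lambda m: m.group(1) + new, bat_text)


def patch_spoon_bat(pdi_home, old="256m", new="1024m"):
    """Back up spoon.bat to spoon.bat.bak, then rewrite its Xmx value in place."""
    bat = Path(pdi_home) / "spoon.bat"
    # newline="" reads and writes line endings (CRLF) exactly as they are.
    with open(bat, newline="") as f:
        text, count = set_max_heap(f.read(), old, new)
    if count == 0:
        raise ValueError(f"Xmx{old} not found in {bat}")
    shutil.copy2(bat, bat.with_name(bat.name + ".bak"))
    with open(bat, "w", newline="") as f:
        f.write(text)
    return count
```

If the script raises ValueError, your spoon.bat uses a different value; open it in Notepad and check the current -Xmx setting before changing anything.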

In case of any queries, please let me know in the comments section.