Basic Overview of Pentaho Data Integration - A Beginner's Guide

For folks who are in the

initial stages of learning ETL tools and workflow, PDI is one of the most

popular if not the best open-source ETL tool and it is extremely user-friendly

with nice and clean user-interface. It does not require any specific prior

programming language knowledge to work with.

The ETL jobs can be

developed completely by simply dragging & dropping necessary steps into

designing work-space or canvas and connecting them into a network using links

called 'Hops'. The developed jobs can be scheduled to run automatically

at set time intervals through the built-in scheduling features in the data

integrator server and job status can be monitored from any internet browser by

the authorized users and can send the job completion/failure status via email

notifications with execution report as attachment. Job scheduling can also be

performed using scheduling tools like Cron, Task-scheduler, etc.

Once you started using

it, you are going to love it for the simplicity and very powerful features it

packs. It can also be used for data-cleaning/conditioning requirements using

various text/numerical operations available.

In this post, lets dive

into features and look and feel of this tool.

Jobs Vs.

Transformations: What does each represent?

The term 'Job' under the

PDI context, represents a combination of one or more jobs and transformations.

Usually, the ETL code developed using PDI would have a single parent/master job

which calls subsequent child jobs and transformations.

The term

'Transformation' is the primary layer which contains the actual transformations

like sorting, any text operations, aggregation, filtering, formatting, removing

duplicates, etc. to be performed on the data pipeline based on the business

requirements.

The Job is the

high-level layer which has the workflow rules like order in which the dependent

jobs/transformations will be executed. Child jobs can be made to execute in

parallel/serial manner if required. There is a provision to divert/redirect the

workflow based on certain condition at Job level. Jobs are typically designed

with START step at the beginning and with Success step at the end. We can even

place an Abort Job step to end the flow if needed.

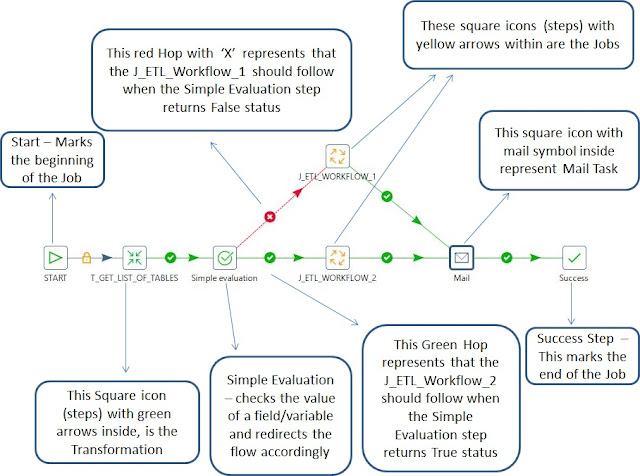

The below picture gives

an idea about the elements in a simple Job.

|

| Fig 1. Screenshot of a simple PDI Job |

In short, we usually

design any PDI/Kettle Job in a way that it calls the other Jobs and/or

Transformations.

Job takes care of :

- Workflow and execution of other jobs (this includes PDI

job/Transformation, Sql query/Javascript/Shell script execution, Oozie job

execution,etc.).

- Folder and file management like

creation/copying/deleting/moving files/folders, file transfer via

FTP/SFTP.

- Evaluating conditions like checking if a file exists in

the specified folder path, doing a simple evaluation eg. checking the

value of a parameter or a column.

- Checking if a table exists in a database.

- Checking no. of rows in a Database table.

- Truncating the records in a Database table.

On the other hand, a

Transformation manages/processes data:

- Extracting/Integration the data from various sources

like Databases and Files.

- Performing Lookup operation with reference data set.

- String manipulation by removing a portion of a string

value/concatenating an existing string with another string/replacing a

specific characters of a string with alternate characters.

- Number values manipulation by modifying the decimal

places.

- Converting Number format value to text format and

string to number (only valid numbers in text format).

- Removing the duplicate rows in a data set.

- Merging the rows from two different data sources.

- Replacing Null values with default values and vice

versa.

- Mapping the value of a column to another column.

- Sorting the rows.

- Creating a hash-sum value of a combination of values.

- Renaming/removing a column name.

- Loading the processed data to various targets like Database table/File output.

PDI Components

Pentaho Data Integration

(also called Kettle or PDI) follows a cooking-related theme in naming

convention for its components and they are listed below:

- Spoon: Jobs are created using Spoon. It is where the jobs and transformations are created. It has graphical user interface using which code can be developed by simple drag & drop gesture as mentioned earlier in this post.

- Pan: It

is a command-line interface where we can run the transformations.

- Kitchen: It

is a command-line interface where we can run the jobs.

- Carte: Helps to run the jobs/transformations in remote server mode.

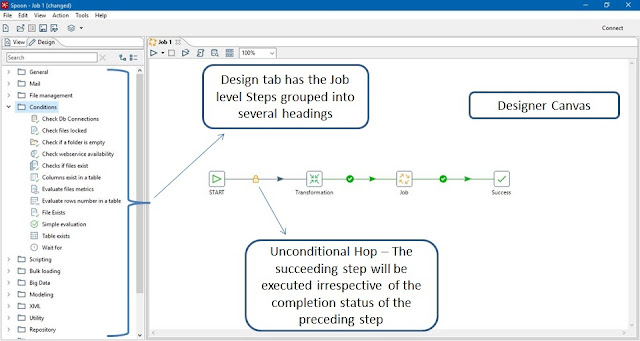

Spoon Tool

The Spoon is the PDI

Client and it is where the Jobs and Transformations are created. Lets have look

at the Spoon user interface:

|

| Fig. 2. Screenshot of Spoon User-Interface and welcome screen |

The above screen is shown when we open the Spoon tool. The left side pane has two tabs circled in blue in the above image: View (displays the list of objects present in current context) and Design (displays the list of steps that can be used to develop a PDI job or transformation).

Transformation Design

Transformation has steps

(which are square icons with customize-able names available in the design tab

in the left side pane which can be dropped into designer canvas) connected by

lines with arrows called Hops. The steps can be edited by double-clicking or by

right-clicking and selecting the Edit option.

Any two steps can be connected by a hop by clicking the first step and pressing the shift key in the keyboard and dragging the mouse pointer to second step. Alternatively we can join two steps simply by clicking the first step and four options will be displayed below the step in which we need to select the last option in the left and drag it to the second step.

We can disable the Hop without deleting it so that the succeeding step will not be executed. This feature comes handy when doing debugging and what-if analysis.

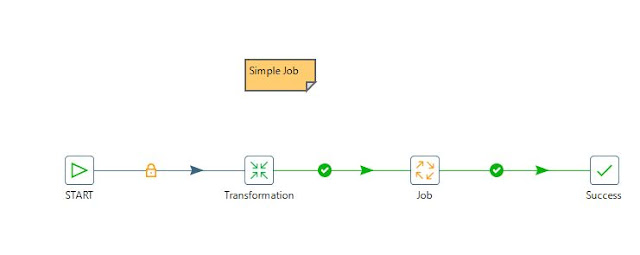

The central white

area is the designer canvas where we actually place the transformation/job

steps. An example is shown below:

|

| Fig. 3. Screenshot displaying a simple transformation |

Job Design

Designing a PDI job is similar to designing a PDI

transformation. A simple job design is shown below:

Similar to a transformation, a PDI job also has hops connecting

the steps but with additional workflow controlling options (which are not

available in the transformation) that can be accessed by right-clicking the hop

and selecting Evaluation containing the below settings:

1. Unconditional: It is shown as a lock

symbol and it means the step at the end (right side) of the hop is independent

of the step at the beginning (left side) of the hop which means the steps would

start executing without waiting for other steps to complete as soon as the job

is started.

2. Follow when result is

True: This is indicated by a

green circle with check symbol. This setting means the succeeding step should

be executed only when the preceding step returns a True status (in case of

simple evaluation step) or only when the preceding step is completed.

3. Follow when result is

False: This settings is opposite in function to previous setting and this

allows the succeeding step to execute only when the preceding step returns a

False status in simple evaluation. This mode is displayed as red circle with

'X' inside.

Notes

We can add descriptive comments on transformation

and jobs using a feature called Notes by right-clicking anywhere in the design

canvas and selecting new note.

|

| Fig. 5. Note placed in PDI job |

General Features

PDI is a java based tool

and it has cool features like support for connecting with wide range of sources

:

·

Databases

- Traditional RDBMS like Oracle, Sql Server, MySQL, etc.

·

Big

Data Databases like Hive

·

JSON

files

·

HL7

files

·

MS

Excel files

·

MS

Access files

·

csv

files

·

txt

files

·

xml

files

·

SAP

·

SAS

·

SalesForce

data

·

Yaml

·

RSS

feeds

·

Google

Analytics

·

Email

messages

It also has the ability to output the data to various kinds of target DB/files, Metadata Injection support, Row normalizer/denormalizer and we can create our own custom transformation step using Javascript.

It has very good

documentation of its various Transformation as well as Job level steps

available online that we can refer. Apart from this, there is also active

community forums on the web where we can find answers to questions related to

design practices, performance tuning, etc by the expert developer members.

Saving the Transformation/Job

The Jobs and Transformations created can be stored in three

ways:

1. Saving the jobs/transformation in the local file system:

The files will be stored in the local machine's file system. This

can be used for learning and training purposes. This is not ideal for

Production environments.

2. Saving in Pentaho Repository:

This method is recommended by Pentaho for Production environments.

It is available only for Enterprise Edition.

- It

has high-level security.

- Locking

of the files for writing while permitting specific users to make changes.

- Maintains version history of the jobs/transformations and in case of any mishap in the recently deployed (moving the developed the jobs/transformations to Production environment from Development after testing) files, we can revert back to previous version of the same jobs/transformations. In general, the Pentaho Repository is managed by Pentaho Administrator.

3. Using a database as a repository:

In this approach, we will use a database to store the ETL metadata

info and again this is also not supported or recommended by Pentaho for

Production usage.

Product License

PDI aka Kettle comes

with two licensing options:

- Community

Edition (CE)

- Enterprise

Edition (EE)

The Community Edition has all the features that are present in Enterprise Edition but the Enterprise Edition comes with additional plugins and product support.

Summary of the Post

To Summarize the various topics that we

have seen in this post,

1. General Overview of PDI as an ETL tool.

2. Detailed differences between a PDI Job

and Transformation.

3. User Interface and layout of the Spoon

window.

4. General idea on how a Pentaho Job

and Transformation would look like using simple examples.

5. List of the sources where the data can

be extracted and other features.

6. Repository types.

7. PDI License options.

Even if you had already worked with some

other ETL tools, you would definitely think why other tools are not this

simple. Even I felt the same.We can easily prototype our design ideas

quickly. In the upcoming posts, we will be working with Community Edition

of Kettle which is open-source and free to download.

Please feel free to comment your thoughts

about this post, that way I can update the comments. Also let me know in the

comments section if you want me to explain any topics on PDI.

Can you plz Explain what is the difference between pentaho report designer and Pentaho dashboard(Business Intellegence server)and also pentaho data integration

ReplyDeleteHi Suma! Thanks for reaching out. Pentaho Business Analytics Platform consists of collection of tools to integrate, standardize, visualize and analyze the business data.

DeletePentaho Data Integration (PDI also called as Kettle), Pentaho Report Designer are some of the tools within this platform.

Pentaho Data Integration gives the ETL (Extract, Transform and Load) engine to integrate data from several sources, clean and filter any junk data and transform into useful format and load them to finally to desired destination file or database. It is a desktop application.

Pentaho Report Designer is used to create business reports based on data from variety of sources. It consists of tables, charts and graphs which helps Business team to get useful insights on their data and take critical business decisions. It is also a desktop application.

Speaking of Business Intelligence Server, it is a server application which hosts the content published from desktop applications, such as pentaho reports, data integration jobs and transformation files and also provides scheduling feature to automate regular ETL jobs. A dashboard is created in the BI server, which is a single page report containing key features from Pentaho reports, to give a quick glimpse without having to go through multi paged reports.

Thank you very much sir..your explanation is very understandable,neat and clean.

DeleteCould you plz tell me what is the use of Pentaho data integration why because you said that Pentaho report designer is to create a business reports by using charts and graphs and Dashboard was created in BI server,which is a single page report.

what should PDI do..?

Hi Suma! PDI is n ETL tool and it does not have visualization capabilities. Using PDI, we can extract from several sources, transform the data as per the business requirements and finally load them in a destination which can be a Database or file. It helps in data migration as well.

DeleteThanks for Responding sir..

ReplyDeleteIt means a lot..!